Monitors (CRT/LCD/LED), Memory

Courtesy: vrindawan.in

Wikipedia

A computer monitor is an output device that displays information in pictorial or text form. A monitor usually comprises a visual display, some circuitry, a casing, and a power supply. The display device in modern monitors is typically a thin-film-transistor liquid-crystal display (TFT-LCD) with LED backlighting, which has replaced cold-cathode fluorescent lamp (CCFL) backlighting. Earlier monitors used a cathode-ray tube (CRT), and some used plasma (also called gas-plasma) displays. Monitors are connected to the computer via VGA, Digital Visual Interface (DVI), HDMI, DisplayPort, USB-C, low-voltage differential signaling (LVDS) or other proprietary connectors and signals.

Originally, computer monitors were used for data processing while television sets were used for entertainment. From the 1980s onwards, computers (and their monitors) have been used for both data processing and entertainment, while televisions have implemented some computer functionality. The common aspect ratio of televisions and computer monitors has changed from 4:3 to 16:10 and then to 16:9.
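An aspect ratio is simply a display's width divided by its height, reduced to lowest terms. The following is a minimal Python sketch, assuming square pixels so that the pixel resolution reflects the physical shape of the screen. The example resolutions and the function name `aspect_ratio` are illustrative and do not come from the text.

```python
from math import gcd


def aspect_ratio(width: int, height: int) -> tuple[int, int]:
    """Reduce a pixel resolution to its simplest width:height ratio.

    Assumes square pixels, so the pixel grid has the same shape as the screen.
    """
    d = gcd(width, height)
    return width // d, height // d


# Example resolutions for each of the three common ratios.
for w, h in [(1024, 768), (1920, 1200), (1920, 1080)]:
    rw, rh = aspect_ratio(w, h)
    print(f"{w}x{h} -> {rw}:{rh}")
```

Note that 1920 × 1200 reduces to 8:5, which is conventionally written as 16:10.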

Modern computer monitors are easily interchangeable with conventional television sets and vice versa. However, because many computer monitors include neither integrated speakers nor TV tuners (such as digital television adapters), it may not be possible to use a computer monitor as a TV set without external components.

Early electronic computers were fitted with a panel of light bulbs in which each bulb indicated the on/off state of a particular register bit inside the computer. This allowed the engineers operating the computer to monitor the internal state of the machine, so this panel of lights came to be known as the ‘monitor’. Because early monitors could display only a very limited amount of information, and that information was very transient, they were rarely considered for program output. Instead, a line printer was the primary output device, while the monitor was limited to keeping track of the program’s operation.
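The following Python sketch makes the lamp-panel idea concrete by rendering each bit of a register as one lamp, using `*` for a lit bulb and `.` for a dark one. The 16-bit width, the function name `lamp_panel` and the example value are hypothetical, since real machines varied in word size and panel layout.

```python
def lamp_panel(register: int, width: int = 16) -> str:
    """Render each bit of a register as a lamp: '*' for on (1), '.' for off (0).

    The most significant bit is shown on the left, as on a row of bulbs
    read left to right. The 16-bit default width is an illustrative choice.
    """
    if register < 0 or register >= 1 << width:
        raise ValueError("register value does not fit in the given width")
    return "".join(
        "*" if (register >> bit) & 1 else "."
        for bit in reversed(range(width))
    )


# Show a 16-bit register with a few bits set.
print(lamp_panel(0b1010_0000_0000_0101))
```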

Computer monitors were formerly known as visual display units (VDU), particularly in British English. This term mostly fell out of use by the 1990s.

Multiple technologies have been used for computer monitors. Until the 21st century most used cathode-ray tubes, but these have largely been superseded by LCD monitors.

The first computer monitors used cathode-ray tubes (CRTs). Prior to the advent of home computers in the late 1970s, it was common for a video display terminal (VDT) using a CRT to be physically integrated with a keyboard and other components of the system in a single large chassis. The display was monochromatic and far less sharp and detailed than on a modern flat-panel monitor, necessitating the use of relatively large text and severely limiting the amount of information that could be displayed at one time. High-resolution CRT displays were developed for specialized military, industrial and scientific applications, but they were far too costly for general use; wider commercial use became possible after the release of the slow but affordable Tektronix 4010 terminal in 1972.

Some of the earliest home computers (such as the TRS-80 and Commodore PET) were limited to monochrome CRT displays, but color display capability was already possible on a few MOS 6500 series-based machines (such as the Apple II computer and the Atari 2600 console, both introduced in 1977), and color output was a specialty of the more graphically sophisticated Atari 800 computer, introduced in 1979. Such computers could be connected to the antenna terminals of an ordinary color TV set or used with a purpose-made CRT color monitor for optimum resolution and color quality. Lagging several years behind, IBM introduced the Color Graphics Adapter in 1981, which could display four colors at a resolution of 320 × 200 pixels, or two colors at 640 × 200 pixels. In 1984 IBM introduced the Enhanced Graphics Adapter, which could display 16 colors at a resolution of 640 × 350.
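These adapter modes show why resolution and color count traded off against each other. A frame needs roughly width × height × bits-per-pixel of memory, and n colors need ⌈log2 n⌉ bits per pixel. The Python sketch below applies that arithmetic to the three modes mentioned above. It assumes tightly packed pixels with no palette or attribute overhead, and it does not model how IBM's adapters actually laid out their video memory (for example, interleaved or planar layouts). The helper name `framebuffer_bytes` is hypothetical.

```python
from math import ceil, log2


def framebuffer_bytes(width: int, height: int, colors: int) -> int:
    """Minimum memory for one frame, in bytes.

    Assumes packed pixels using just enough bits per pixel for the number
    of colors, rounded up to a whole number of bytes for the frame.
    """
    bits_per_pixel = ceil(log2(colors))
    total_bits = width * height * bits_per_pixel
    return (total_bits + 7) // 8


modes = {
    "CGA 320x200, 4 colors": (320, 200, 4),
    "CGA 640x200, 2 colors": (640, 200, 2),
    "EGA 640x350, 16 colors": (640, 350, 16),
}
for name, (w, h, c) in modes.items():
    print(f"{name}: {framebuffer_bytes(w, h, c):,} bytes")
```

Both CGA modes work out to the same 16,000 bytes under this model. Doubling the horizontal resolution halves the bits available per pixel, which matches the four-color versus two-color trade-off described in the text.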

By the end of the 1980s, color CRT monitors that could clearly display 1024 × 768 pixels were widely available and increasingly affordable. During the following decade, maximum display resolutions gradually increased and prices continued to fall. CRT technology remained dominant in the personal computer (PC) monitor market into the new millennium, partly because it was cheaper to produce and offered viewing angles close to 180°. CRTs still offer some image quality advantages over LCDs, but improvements to the latter have made them much less obvious. The dynamic range of early LCD panels was very poor, and although text and other motionless graphics were sharper than on a CRT, an LCD characteristic known as pixel lag caused moving graphics to appear noticeably smeared and blurry.

Various technologies have been used to implement liquid-crystal displays (LCDs). Throughout the 1990s, LCDs were used as computer monitors mainly in laptops, where their lower power consumption, lighter weight, and smaller physical size justified the higher price compared with a CRT. Commonly, the same laptop would be offered with an assortment of display options at increasing price points: (active or passive) monochrome, passive color, or active matrix color (TFT). As volume and manufacturing capability improved, the monochrome and passive color technologies were dropped from most product lines.

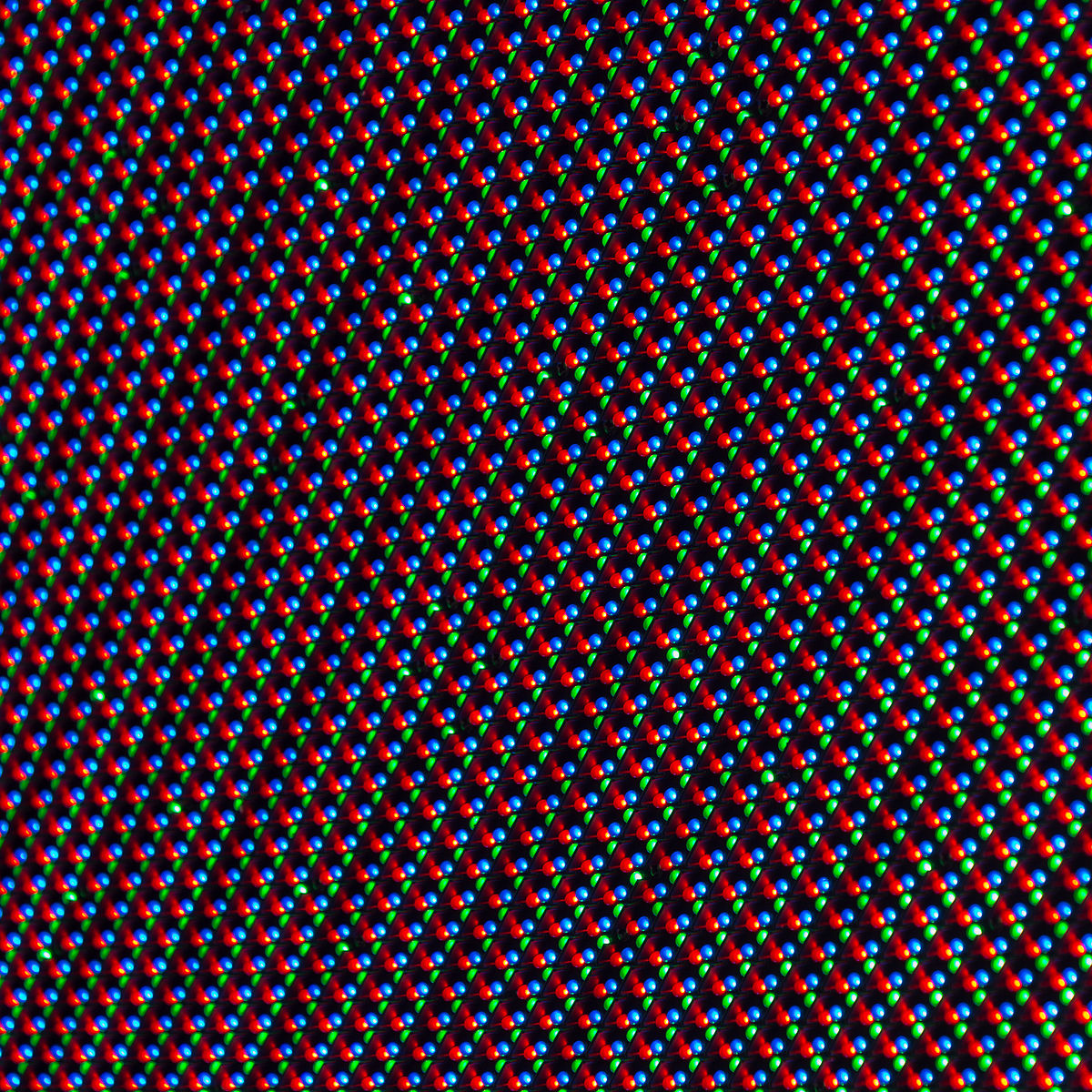

TFT-LCD is a variant of LCD which is now the dominant technology used for computer monitors.

The first standalone LCDs appeared in the mid-1990s, selling for high prices. As prices declined over a period of years they became more popular, and by 1997 they were competing with CRT monitors. Among the first desktop LCD computer monitors were the Eizo FlexScan L66 in the mid-1990s and the SGI 1600SW, Apple Studio Display and ViewSonic VP140 in 1998. In 2003, TFT-LCDs outsold CRTs for the first time, becoming the primary technology used for computer monitors. The main advantages of LCDs over CRT displays are that LCDs consume less power, take up much less space, and are considerably lighter. The now common active matrix TFT-LCD technology also has less flickering than CRTs, which reduces eye strain. On the other hand, CRT monitors have superior contrast and response time, can display multiple screen resolutions natively, and show no discernible flicker if the refresh rate is set sufficiently high. LCD monitors now have very high temporal accuracy and can be used for vision research.

High dynamic range (HDR) has been implemented in high-end LCD monitors to improve color accuracy. Since around the late 2000s, widescreen LCD monitors have become popular, in part because television series, motion pictures and video games have transitioned to high-definition (HD) formats, which standard-width monitors cannot display at full size without stretching or cropping the content. Alternatively, standard-width monitors can show HD content at its proper width by filling the extra space at the top and bottom of the image with a solid color (“letterboxing”). Other advantages of widescreen monitors over standard-width monitors are that they make work more productive by displaying more of a user’s documents and images, and allow toolbars to be displayed alongside documents. They also have a wider viewing area, with a typical widescreen monitor having a 16:9 aspect ratio, compared with the 4:3 aspect ratio of a typical standard-width monitor.
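Letterboxing is aspect-preserving scaling. The content is fitted to whichever screen dimension is the tighter constraint, then centered, and the remaining space is filled with bars. The following is a minimal Python sketch, assuming integer pixel dimensions and square pixels. The name `letterbox` and the example resolutions are illustrative.

```python
from fractions import Fraction


def letterbox(screen_w: int, screen_h: int,
              content_aspect: Fraction) -> tuple[int, int, int, int]:
    """Scale content to fit the screen without distortion.

    Returns (content_w, content_h, bar_x, bar_y): the scaled picture size,
    the width of each side bar, and the height of each top/bottom bar.
    """
    screen_aspect = Fraction(screen_w, screen_h)
    if content_aspect > screen_aspect:
        # Content is wider than the screen: fill the width, bars top and bottom.
        w, h = screen_w, round(screen_w / content_aspect)
    else:
        # Content is narrower (or equal): fill the height, bars at the sides.
        w, h = round(screen_h * content_aspect), screen_h
    return w, h, (screen_w - w) // 2, (screen_h - h) // 2


print(letterbox(1024, 768, Fraction(16, 9)))   # HD content on a 4:3 screen
print(letterbox(1920, 1080, Fraction(4, 3)))   # 4:3 content on a 16:9 screen
```

If 16:9 content is fitted to a 1024 × 768 (4:3) screen, the result is a 1024 × 576 picture with 96-pixel bars at the top and bottom. Going the other way, 4:3 content on a 1920 × 1080 screen gets 240-pixel bars at the sides. Using `Fraction` keeps the ratio comparison exact, so no rounding occurs until the final pixel counts are computed.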

An LCD projector is a type of video projector for displaying video, images or computer data on a screen or other flat surface. It is a modern equivalent of the slide projector or overhead projector. To display images, LCD (liquid-crystal display) projectors typically send light from a metal-halide lamp through a prism or series of dichroic filters that separate the light onto three polysilicon panels, one each for the red, green and blue components of the video signal. As polarized light passes through the panels (each a combination of polarizer, LCD panel and analyzer), individual pixels can be opened to allow light to pass or closed to block it. The combination of open and closed pixels can produce a wide range of colors and shades in the projected image.
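The following is a toy model of the three-panel scheme. Each panel's pixel is treated as a filter that passes some fraction of its colored light, and the three fractions are combined into one RGB value. This Python sketch is a simplification that ignores the lamp spectrum, polarizer losses and color calibration. `projected_color` is a hypothetical name.

```python
from itertools import product


def projected_color(r_open: float, g_open: float,
                    b_open: float) -> tuple[int, int, int]:
    """Combine the transmittance of the red, green and blue panels at one pixel.

    Each argument is the fraction of that panel's light let through
    (0.0 = closed, 1.0 = fully open); the result is an 8-bit RGB triple.
    """
    for t in (r_open, g_open, b_open):
        if not 0.0 <= t <= 1.0:
            raise ValueError("transmittance must be between 0 and 1")
    return tuple(round(255 * t) for t in (r_open, g_open, b_open))


# With pixels that are only fully open or fully closed, three panels give 2**3 colors.
binary_colors = {projected_color(*state) for state in product((0.0, 1.0), repeat=3)}
print(len(binary_colors))
```

Fully open or fully closed pixels alone yield 2³ = 8 colors. Intermediate transmittance levels, for example 256 per panel, expand that to 256³ (about 16.7 million) combinations. This is the sense in which open and closed pixels produce a wide range of colors and shades.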

Metal-halide lamps are used because they output an ideal color temperature and a broad spectrum of color. These lamps can also produce an extremely large amount of light from a small area; current projectors typically output about 2,000 to 15,000 American National Standards Institute (ANSI) lumens.
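A lumen rating can be related to the light that reaches the screen. Illuminance in lux is luminous flux in lumens per square metre. The Python sketch below is an idealization that assumes the projector's full rated output falls evenly on the screen with no losses. The 2.0 m × 1.5 m screen size is an arbitrary example, and `average_illuminance_lux` is a hypothetical helper.

```python
def average_illuminance_lux(lumens: float, screen_w_m: float,
                            screen_h_m: float) -> float:
    """Average illuminance on the screen (1 lux = 1 lumen per square metre).

    Idealized: assumes all of the projector's luminous flux lands evenly
    on the screen, with no lens or spill losses.
    """
    area_m2 = screen_w_m * screen_h_m
    if area_m2 <= 0:
        raise ValueError("screen dimensions must be positive")
    return lumens / area_m2


# The two ends of the lumen range quoted above, on an example 3 m^2 screen.
for lm in (2_000, 15_000):
    lux = average_illuminance_lux(lm, 2.0, 1.5)
    print(f"{lm:,} lm on a 2.0 m x 1.5 m screen -> {lux:.0f} lux")
```

Under these assumptions, the 2,000 to 15,000 lumen range corresponds to roughly 670 to 5,000 lux on a 3 m² screen. Real figures are lower because of optical and spill losses.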

Other technologies, such as Digital Light Processing (DLP) and liquid crystal on silicon (LCOS) are also becoming more popular in modestly priced video projection.

Because they use small lamps and can project an image onto any flat surface, LCD projectors tend to be smaller and more portable than some other types of projection systems. Even so, the best image quality is obtained on a blank white, grey, or black (which blocks reflected ambient light) surface, so dedicated projection screens are often used.

Perceived color in a projected image depends on both the projection surface and the projector quality. Since white is a more neutral color, white surfaces are best suited for natural color tones; as such, white projection surfaces are more common in business and school presentation environments.

However, the darkest black in a projected image depends on how dark the screen is. Because of this, some presenters and presentation-space planners prefer gray screens, which create higher perceived contrast. The trade-off is that darker backgrounds can throw off color tones. Color problems can sometimes be corrected through the projector settings, but the result may not be as accurate as on a white background.

An LED display is a flat panel display that uses an array of light-emitting diodes as pixels for a video display. Their brightness allows them to be used outdoors, where they remain visible in sunlight, for store signs and billboards. In recent years, they have also become commonly used in destination signs on public transport vehicles, as well as variable-message signs on highways. LED displays can provide general illumination in addition to visual display, as when used for stage lighting or other decorative (as opposed to informational) purposes. LED displays can offer higher contrast ratios than a projector and are thus an alternative to traditional projection screens, and they can be used for large, uninterrupted video walls (without a visible grid from the bezels of individual displays). MicroLED displays are LED displays with smaller LEDs, which poses significant development challenges.

Light-emitting diodes (LEDs) came into existence in 1962 and were primarily red in color for the first decade. The first practical LED was invented by Nick Holonyak in 1962 while he was at General Electric.

The first practical LED display was developed at Hewlett-Packard (HP) and introduced in 1968. Its development was led by Howard C. Borden and Gerald P. Pighini at HP Associates and HP Labs, who had engaged in research and development (R&D) on practical LEDs between 1962 and 1968. In February 1969, they introduced the HP Model 5082-7000 Numeric Indicator. It was the first LED device to use integrated circuit (integrated LED circuit) technology and the first intelligent LED display. It revolutionized digital display technology, replacing the Nixie tube and becoming the basis for later LED displays.

Early models were monochromatic by design. The efficient blue LED, which completed the color triad, did not arrive commercially until the 1990s.

In the late 1980s, aluminium indium gallium phosphide LEDs arrived. They provided an efficient source of red and amber and were used in information displays. However, it was still impossible to achieve full colour. The available “green” was hardly green at all (it was mostly yellow), and an early blue had excessively high power consumption. Only when Shuji Nakamura, then at Nichia Chemical, announced the development of the blue (and later green) LED based on indium gallium nitride did the possibility of large LED video displays open up.

Mark Fisher’s design for U2’s PopMart Tour of 1997 gave the whole idea of what could be done with LEDs an early shake-up. He realized that with long viewing distances, wide pixel spacing could be used to achieve very large images, especially if viewed at night. The system had to be suitable for touring, so an open mesh arrangement that could be rolled up for transport was used. The whole display was 52 m (170 ft) wide and 17 m (56 ft) high, with a total of 150,000 pixels. The company that supplied the LED pixels and their driving system, SACO Technologies of Montreal, had never engineered a video system before, having previously built mimic panels for power station control rooms.
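The PopMart figures allow a rough check of how widely spaced the pixels were. The Python sketch below assumes that the 150,000 pixels sat on a uniform square grid across the full 52 m × 17 m area, since the text does not give the actual layout. It then computes the angle one pixel subtends at a few example viewing distances. The helper names are hypothetical.

```python
import math


def approx_pixel_pitch_m(width_m: float, height_m: float, pixels: int) -> float:
    """Estimate pixel pitch, assuming pixels sit on a uniform square grid."""
    return math.sqrt(width_m * height_m / pixels)


def angular_size_arcmin(size_m: float, distance_m: float) -> float:
    """Angle (in arcminutes) subtended by an object seen head-on at a distance."""
    return math.degrees(2 * math.atan(size_m / (2 * distance_m))) * 60


pitch = approx_pixel_pitch_m(52.0, 17.0, 150_000)
print(f"approximate pitch: {pitch * 1000:.0f} mm")
for d in (50, 100, 200):  # example viewing distances in metres
    print(f"at {d} m one pixel spans {angular_size_arcmin(pitch, d):.1f} arcmin")
```

Under that assumption, the pitch comes out at roughly 77 mm. The angle each pixel subtends shrinks roughly in inverse proportion to distance, which is why such coarse spacing can still read as a continuous image from far away.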

Today, large displays use high-brightness diodes to generate a wide spectrum of colors. It took three decades, and organic light-emitting diodes, for Sony to introduce an OLED TV, the Sony XEL-1 OLED screen, which was marketed in 2009. Later, at CES 2012, Sony presented Crystal LED, a TV with a true LED display, in which LEDs produce the actual image rather than acting as backlighting for another type of display, as in the LED-backlit LCDs commonly marketed as LED TVs.