Computer Assembly Set By Set Innovation

COURTESY :- vrindawan.in

Wikipedia

A computer is a digital electronic machine that can be programmed to carry out sequences of arithmetic or logical operations (computation) automatically. Modern computers can perform generic sets of operations known as programs. These programs enable computers to perform a wide range of tasks. A computer system is a “complete” computer that includes the hardware, operating system (main software), and peripheral equipment needed and used for “full” operation. This term may also refer to a group of computers that are linked and function together, such as a computer network or computer cluster.

A broad range of industrial and consumer products use computers as control systems. Simple special-purpose devices like microwave ovens and remote controls are included, as are factory devices like industrial robots and computer-aided design, as well as general-purpose devices like personal computers and mobile devices like smartphones. Computers power the Internet, which links billions of other computers and users.

Early computers were meant to be used only for calculations. Simple manual instruments like the abacus have aided people in doing calculations since ancient times. Early in the Industrial Revolution, some mechanical devices were built to automate long tedious tasks, such as guiding patterns for looms. More sophisticated electrical machines did specialized analog calculations in the early 20th century. The first digital electronic calculating machines were developed during World War II. The first semiconductor transistors in the late 1940s were followed by the silicon-based MOSFET (MOS transistor) and monolithic integrated circuit (IC) chip technologies in the late 1950s, leading to the microprocessor and the microcomputer revolution in the 1970s. The speed, power and versatility of computers have been increasing dramatically ever since then, with transistor counts increasing at a rapid pace (as predicted by Moore’s law), leading to the Digital Revolution during the late 20th to early 21st centuries.

Conventionally, a modern computer consists of at least one processing element, typically a central processing unit (CPU) in the form of a microprocessor, along with some type of computer memory, typically semiconductor memory chips. The processing element carries out arithmetic and logical operations, and a sequencing and control unit can change the order of operations in response to stored information. Peripheral devices include input devices (keyboards, mice, joysticks, etc.), output devices (monitor screens, printers, etc.), and input/output devices that perform both functions (e.g., the 2000s-era touchscreen). Peripheral devices allow information to be retrieved from an external source and they enable the result of operations to be saved and retrieved.

According to the Oxford English Dictionary, the first known use of computer was in a 1613 book called The Yong Mans Gleanings by the English writer Richard Brathwait: “I haue [sic] read the truest computer of Times, and the best Arithmetician that euer [sic] breathed, and he reduceth thy dayes into a short number.” This usage of the term referred to a human computer, a person who carried out calculations or computations. The word continued with the same meaning until the middle of the 20th century. During the latter part of this period women were often hired as computers because they could be paid less than their male counterparts. By 1943, most human computers were women.

The Online Etymology Dictionary gives the first attested use of computer in the 1640s, meaning ‘one who calculates’; this is an “agent noun from compute (v.)”. It states that the use of the term to mean “‘calculating machine’ (of any type) is from 1897”, and that the “modern use” of the term, to mean ‘programmable digital electronic computer’, dates from “1945 under this name; [in a] theoretical [sense] from 1937, as Turing machine”.

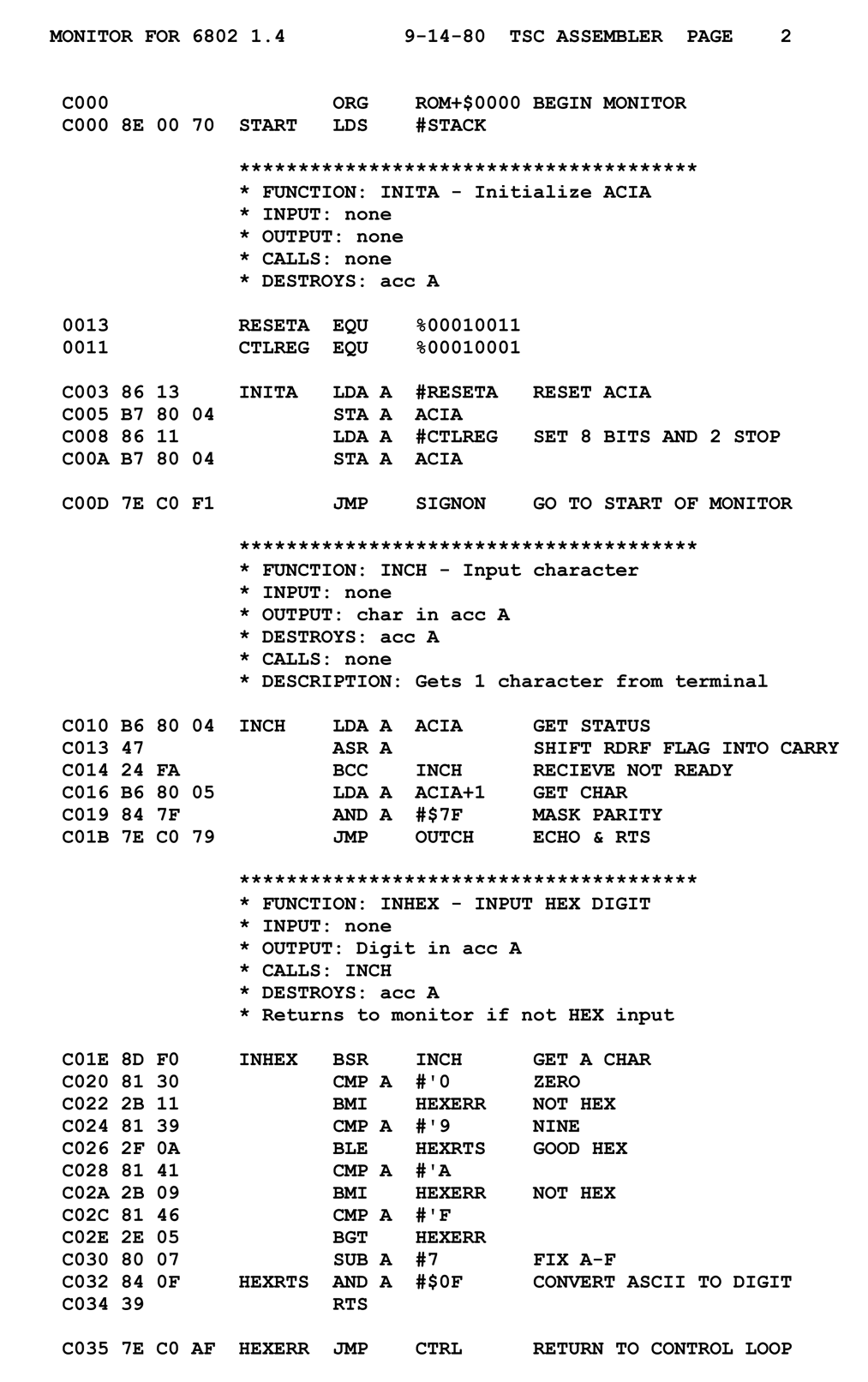

In computer programming, assembly language (or assembler language, or symbolic machine code), often referred to simply as Assembly and commonly abbreviated as ASM or asm, is any low-level programming language with a very strong correspondence between the instructions in the language and the architecture’s machine code instructions. Assembly language usually has one statement per machine instruction (1:1), but constants, comments, assembler directives, symbolic labels of, e.g., memory locations, registers, and macros are generally also supported.

Assembly code is converted into executable machine code by a utility program referred to as an assembler. The term “assembler” is generally attributed to Wilkes, Wheeler and Gill in their 1951 book The Preparation of Programs for an Electronic Digital Computer, who, however, used the term to mean “a program that assembles another program consisting of several sections into a single program”. The conversion process is referred to as assembly, as in assembling the source code. The computational step when an assembler is processing a program is called assembly time.

Because assembly depends on the machine code instructions, each assembly language is specific to a particular computer architecture.

Sometimes there is more than one assembler for the same architecture, and sometimes an assembler is specific to an operating system or to particular operating systems. Most assembly languages do not provide specific syntax for operating system calls, and most assembly languages can be used universally with any operating system, as the language provides access to all the real capabilities of the processor, upon which all system call mechanisms ultimately rest. In contrast to assembly languages, most high-level programming languages are generally portable across multiple architectures but require interpreting or compiling, much more complicated tasks than assembling.

In the first decades of computing, it was commonplace for both systems programming and application programming to take place entirely in assembly language. While still irreplaceable for some purposes, the majority of programming is now conducted in higher-level interpreted and compiled languages. In “No Silver Bullet”, Fred Brooks summarised the effects of the switch away from assembly language programming: “Surely the most powerful stroke for software productivity, reliability, and simplicity has been the progressive use of high-level languages for programming. Most observers credit that development with at least a factor of five in productivity, and with concomitant gains in reliability, simplicity, and comprehensibility.”

Today, it is typical to use small amounts of assembly language code within larger systems implemented in a higher-level language, for performance reasons or to interact directly with hardware in ways unsupported by the higher-level language. For instance, just under 2% of version 4.9 of the Linux kernel source code is written in assembly; more than 97% is written in C.

Assembly language uses a mnemonic to represent, e.g., each low-level machine instruction or opcode, each directive, typically also each architectural register, flag, etc. Some of the mnemonics may be built in and some user defined. Many operations require one or more operands in order to form a complete instruction. Most assemblers permit named constants, registers, and labels for program and memory locations, and can calculate expressions for operands. Thus, programmers are freed from tedious repetitive calculations and assembler programs are much more readable than machine code. Depending on the architecture, these elements may also be combined for specific instructions or addressing modes using offsets or other data as well as fixed addresses. Many assemblers offer additional mechanisms to facilitate program development, to control the assembly process, and to aid debugging.
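The roles of mnemonics, named constants, and labels can be illustrated with a minimal two-pass assembler sketch. Everything about the target machine here is an assumption made for illustration: the four mnemonics, their opcode values, the fixed two-byte instruction size, and the `NAME = value` and `label:` syntax do not correspond to any real architecture or assembler.

```python
# Hypothetical 4-instruction machine; the opcode values are invented for illustration.
OPCODES = {"LOAD": 0x01, "ADD": 0x02, "JMP": 0x03, "HALT": 0xFF}


def assemble(source):
    """Two passes: collect symbols, then emit (opcode, operand) byte pairs."""
    symbols = {}      # named constants and labels
    statements = []   # [mnemonic, operand?] lists kept for the second pass
    address = 0
    for raw in source.splitlines():
        line = raw.split(";", 1)[0].strip()      # drop ';' comments
        if not line:
            continue
        if "=" in line:                          # named constant: NAME = value
            name, value = line.split("=", 1)
            symbols[name.strip()] = int(value.strip(), 0)
            continue
        if ":" in line:                          # label: names the current address
            label, line = line.split(":", 1)
            symbols[label.strip()] = address
            line = line.strip()
            if not line:
                continue
        statements.append(line.split())
        address += 2                             # assumed: every instruction is 2 bytes

    code = []
    for parts in statements:
        mnemonic = parts[0].upper()
        operand = parts[1] if len(parts) > 1 else "0"
        value = symbols[operand] if operand in symbols else int(operand, 0)
        code += [OPCODES[mnemonic], value & 0xFF]
    return bytes(code)


PROGRAM = """
TEN = 10            ; named constant
start: LOAD TEN     ; label marks address 0
       ADD 0x05
       JMP start    ; the assembler substitutes the label's address
       HALT
"""

machine_code = assemble(PROGRAM)
print(machine_code.hex())  # 010a02050300ff00
```

Each source statement maps to exactly one two-byte instruction, and the programmer never has to compute the address behind `start` by hand. Because symbols are resolved in the second pass, a jump may also refer to a label defined later in the source.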

Some assemblers are column oriented, with specific fields in specific columns; this was very common for machines using punched cards in the 1950s and early 1960s. Some assemblers have free-form syntax, with fields separated by delimiters, e.g., punctuation, white space. Some assemblers are hybrid, with, e.g., labels in a specific column and other fields separated by delimiters; this became more common than column-oriented syntax in the 1960s.

All of the IBM assemblers for System/360, by default, have a label in column 1, fields separated by delimiters in columns 2-71, a continuation indicator in column 72 and a sequence number in columns 73-80. The delimiter for label, opcode, operands and comments is spaces, while individual operands are separated by commas and parentheses.
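As a rough illustration of that layout, the sketch below splits one 80-column card image into the fields just described. The function name `split_card` and the returned dictionary keys are hypothetical, and the parsing is deliberately simplified: it ignores comment cards, quoted operands containing blanks, parenthesised sub-operands, and the joining of continued statements.

```python
def split_card(card):
    """Split an 80-column card image into label, opcode, operands, comment, etc."""
    card = card.ljust(80)[:80]
    statement = card[:71]            # columns 1-71: the statement itself
    continued = card[71] != " "      # column 72: continuation indicator
    sequence = card[72:80].strip()   # columns 73-80: sequence number

    if statement[0] == " ":
        # Column 1 is blank, so there is no label on this card.
        parts = [""] + statement.split(None, 2)
    else:
        parts = statement.split(None, 3)
    label, opcode, operands, comment = (parts + [""] * 4)[:4]

    return {
        "label": label,
        "opcode": opcode,
        "operands": operands.split(",") if operands else [],  # commas only (simplified)
        "comment": comment.strip(),
        "continued": continued,
        "sequence": sequence,
    }


card = "LOOP     LR    R1,R2          COPY R2 INTO R1".ljust(71) + " " + "00000010"
fields = split_card(card)
print(fields["label"], fields["opcode"], fields["operands"])  # LOOP LR ['R1', 'R2']
```

The hybrid nature of the format shows up in the code: the label and the last nine columns are found by position, while the opcode, operands, and comment are found by splitting on blanks.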

A one-instruction set computer (OISC), sometimes called an ultimate reduced instruction set computer (URISC), is an abstract machine that uses only one instruction – obviating the need for a machine language opcode. With a judicious choice for the single instruction and given infinite resources, an OISC is capable of being a universal computer in the same manner as traditional computers that have multiple instructions. OISCs have been recommended as aids in teaching computer architecture and have been used as computational models in structural computing research. The first carbon nanotube computer is a 1-bit one-instruction set computer (and has only 178 transistors).

In a Turing-complete model, each memory location can store an arbitrary integer, and – depending on the model – there may be arbitrarily many locations. The instructions themselves reside in memory as a sequence of such integers.

There exists a class of universal computers with a single instruction based on bit manipulation such as bit copying or bit inversion. Since their memory model is finite, as is the memory structure used in real computers, those bit manipulation machines are equivalent to real computers rather than to Turing machines.

Currently known OISCs can be roughly separated into three broad categories:

- Bit-manipulating machines

- Transport triggered architecture machines

- Arithmetic-based Turing-complete machines

Bit-manipulating machines are the simplest class.

The Flip Jump machine has a single instruction, a;b, which flips bit a and then jumps to b. It is the most primitive OISC, yet it remains useful: with the help of its standard library it can perform arithmetic and logic calculations, branching, pointer operations, and function calls.
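A flip-then-jump instruction of this kind can be sketched as a few lines of interpreter. The details below are assumptions made for illustration and are not taken from the Flip Jump specification: memory is a flat list of bits, each operand is an 8-bit little-endian word, an instruction that jumps to its own address is treated as a halt, and `max_steps` is only a safety guard.

```python
W = 8  # assumed word width in bits


def read_word(mem, addr):
    """Read a W-bit little-endian word starting at bit address addr."""
    return sum(mem[addr + i] << i for i in range(W))


def write_word(mem, addr, value):
    """Store value as a W-bit little-endian word starting at bit address addr."""
    for i in range(W):
        mem[addr + i] = (value >> i) & 1


def run_flipjump(mem, ip=0, max_steps=1000):
    """Execute a;b instructions until one jumps to itself (assumed halt)."""
    for _ in range(max_steps):
        a = read_word(mem, ip)
        mem[a] ^= 1                  # flip the bit at address a
        b = read_word(mem, ip + W)   # read b after the flip, so a flip can alter the jump
        if b == ip:
            return mem
        ip = b
    raise RuntimeError("step limit reached")


# Two instructions: flip bit 48 and go to 16; flip bit 49 and halt.
mem = [0] * 64
write_word(mem, 0, 48)
write_word(mem, 8, 16)
write_word(mem, 16, 49)
write_word(mem, 24, 16)
result = run_flipjump(mem)
print(result[48], result[49])  # 1 1
```

Because the jump target is read after the flip, an instruction can flip a bit inside a jump address that is about to be used, which is how a machine with no conditional instruction can still branch.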

A bit copying machine, called BitBitJump, copies one bit in memory and passes the execution unconditionally to the address specified by one of the operands of the instruction. This process turns out to be capable of universal computation (i.e. being able to execute any algorithm and to interpret any other universal machine) because copying bits can conditionally modify the code that will be subsequently executed.
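The claim that bit copying can act as a conditional branch can be made concrete with a small sketch. The three-operand layout (copy bit a to bit b, then jump to c), the 8-bit little-endian words, the jump-to-self halting convention, and every address used below are assumptions chosen for illustration rather than details of the actual machine.

```python
W = 8  # assumed word width in bits


def word(mem, addr):
    """Read a W-bit little-endian word starting at bit address addr."""
    return sum(mem[addr + i] << i for i in range(W))


def put(mem, addr, *values):
    """Store consecutive W-bit words starting at bit address addr."""
    for n, value in enumerate(values):
        for i in range(W):
            mem[addr + n * W + i] = (value >> i) & 1


def run_bbj(mem, ip=0, max_steps=1000):
    """Run 'copy bit a to bit b, jump to c' until an instruction jumps to itself."""
    for _ in range(max_steps):
        a, b = word(mem, ip), word(mem, ip + W)
        mem[b] = mem[a]               # copy one bit
        c = word(mem, ip + 2 * W)     # unconditional jump target, read after the copy
        if c == ip:                   # assumed halting convention
            return mem
        ip = c
    raise RuntimeError("step limit reached")


def branch_demo(flag):
    """Return (took_zero_path, took_one_path) for the given flag bit."""
    mem = [0] * 256
    mem[200] = flag                   # the condition
    mem[202] = 1                      # a constant 1 bit
    put(mem, 0, 200, 46, 24)          # copy flag into bit 6 of the next jump address
    put(mem, 24, 201, 201, 48)        # no-op copy; jumps to 48, or to 112 if patched
    put(mem, 48, 202, 210, 48)        # flag == 0 path: set bit 210, halt
    put(mem, 112, 202, 211, 112)      # flag == 1 path: set bit 211, halt
    run_bbj(mem)
    return mem[210], mem[211]


print(branch_demo(0), branch_demo(1))  # (1, 0) (0, 1)
```

Every jump in the program is unconditional, yet the two runs end in different places: the first instruction copies the flag into the address field of the second, so the data decides where execution goes next.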

Another machine, called the Toga Computer, inverts a bit and passes the execution conditionally depending on the result of inversion. The unique instruction is TOGA(a,b) which stands for TOGgle a And branch to b if the result of the toggle operation is true.
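A toggle-and-branch instruction can be sketched in the same spirit. In this simplified model, which is an assumption rather than a description of the Toga Computer itself, the program is a list of `(a, b)` pairs held separately from the data bits, execution falls through to the next instruction when the toggled bit becomes 0, and the machine halts when the instruction pointer leaves the program.

```python
def run_toga(program, bits, max_steps=1000):
    """Run TOGA(a, b) instructions; return the data bits and the number of steps taken."""
    ip = 0
    steps = 0
    while 0 <= ip < len(program):
        if steps >= max_steps:
            raise RuntimeError("step limit reached")
        a, b = program[ip]
        bits[a] ^= 1                     # toggle bit a
        ip = b if bits[a] else ip + 1    # branch to b only if the result is 1
        steps += 1
    return bits, steps


# Instruction 0 loops back to itself once, then falls through.
# Instruction 1 sets bit 1 and branches past the end of the program, which halts.
program = [(0, 0), (1, 2)]
final_bits, steps = run_toga(program, [0, 0])
print(final_bits, steps)  # [0, 1] 3
```

The first instruction executes twice: the first toggle yields 1 and branches back, the second yields 0 and falls through, so a single instruction type provides both state change and conditional control flow.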

Similar to BitBitJump, a multi-bit copying machine copies several bits at the same time. The problem of computational universality is solved in this case by keeping predefined jump tables in the memory.