Concept of data processing

Data processing is the conversion of raw data into meaningful, usable information through manipulation, transformation, and analysis. It covers the techniques used to put data into a form suitable for decision-making, reporting, and further analysis. Its activities range from simple data entry and validation to complex tasks such as statistical analysis and machine learning.

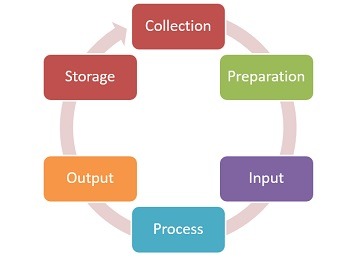

Here are the key stages and concepts involved in data processing:

- Data Collection: The process begins with collecting raw data from various sources, which could include sensors, databases, forms, surveys, websites, and more.

- Data Entry: This involves inputting the collected data into a digital system, often through manual typing or automated data ingestion processes.

- Data Validation and Cleaning: Raw data can be erroneous or incomplete. Validation checks ensure data accuracy and completeness. Cleaning involves identifying and correcting errors, inconsistencies, and outliers.

- Data Transformation: Raw data may need to be transformed into a more suitable format for analysis. This could involve converting data types, aggregating data, or merging data from multiple sources.

- Data Storage: Processed data is often stored in databases, data warehouses, or other storage systems that allow for efficient retrieval and management.

- Data Analysis: This stage involves using various techniques to extract insights and meaning from the processed data. Statistical analysis, data mining, and machine learning are common methods used in this phase.

- Data Visualization: Data is often more understandable when presented visually. Data visualization tools create charts, graphs, and other visual representations of data patterns and trends.
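As a rough illustration of how the validation, transformation, and analysis stages above fit together, here is a minimal Python sketch. The records, field names (`store`, `amount`), and helper functions are hypothetical and exist only for this example. A real pipeline would read from an actual source and apply rules specific to its data.

```python
from collections import defaultdict

# Hypothetical raw records, as they might arrive from a form or a CSV export.
raw_records = [
    {"store": "north", "amount": "19.99"},
    {"store": "north", "amount": "5.01"},
    {"store": "south", "amount": ""},       # missing value
    {"store": "south", "amount": "12.50"},
    {"store": "south", "amount": "abc"},    # not a number
]


def is_valid(record):
    """Validation: accept only records whose amount parses as a non-negative number."""
    try:
        return float(record["amount"]) >= 0
    except ValueError:
        return False


def transform(record):
    """Transformation: convert the amount from text to a float."""
    return {"store": record["store"], "amount": float(record["amount"])}


def total_by_store(records):
    """Analysis: aggregate the amounts per store."""
    totals = defaultdict(float)
    for record in records:
        totals[record["store"]] += record["amount"]
    return dict(totals)


# Cleaning here simply drops invalid records; other strategies are possible.
clean = [transform(r) for r in raw_records if is_valid(r)]
totals = total_by_store(clean)
print(totals)
```

Dropping invalid records is only one cleaning strategy. Depending on the use case, correcting or imputing the values may be more appropriate.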

What is required for data processing

Data processing must meet several requirements to be accurate, reliable, and fit for its intended purpose. These requirements apply across the whole data processing pipeline:

- Data Quality: High-quality input data is essential for meaningful processing. Data should be accurate, complete, consistent, and relevant to the intended purpose. Data quality is maintained through data validation and cleaning processes.

- Data Security: Data security is paramount to protect sensitive information from unauthorized access, breaches, or data leaks. Encryption, access controls, and data masking are among the methods used to secure data.

- Data Privacy: Ensuring compliance with data privacy regulations and policies is crucial. Personal and sensitive data must be handled in accordance with legal requirements such as GDPR, HIPAA, or industry-specific regulations.

- Data Governance: Establishing clear data governance policies and procedures helps manage data throughout its lifecycle, from collection to disposal. This includes data ownership, stewardship, and documentation.

- Scalability: As data volumes grow, the data processing system should be able to scale horizontally or vertically to handle increased workloads efficiently.

- Real-time Processing: Some applications require real-time or near-real-time data processing to enable immediate decision-making. This necessitates low-latency processing capabilities.

- Data Integration: Often, data comes from various sources and formats. Data integration ensures that data from different sources can be combined and processed together seamlessly.

- Data Transformation: Data may need to be transformed into a format suitable for analysis or reporting. This can include data cleansing, aggregation, and normalization.

- Performance Optimization: Data processing systems should be optimized for performance to ensure that processing tasks are completed efficiently and within acceptable time frames.

- Data Storage: Choosing the right storage solutions, such as databases or data warehouses, is crucial to store processed data securely and make it accessible for analysis.

- Data Backup and Recovery: Data should be regularly backed up to prevent data loss in case of hardware failures, data corruption, or other unforeseen events. A robust recovery plan should also be in place.

- Data Documentation: Proper documentation of data sources, processing steps, and metadata is essential for maintaining data lineage and ensuring that others can understand and reproduce the data processing pipeline.

- Data Validation and Testing: Rigorous testing and validation procedures are required to verify that the data processing pipeline functions correctly. This includes unit testing, integration testing, and validation against expected outcomes.

- Data Retention: Define data retention policies that specify how long data should be kept and when it should be archived or deleted in compliance with legal requirements.

- Data Accessibility: Users who need access to processed data should have the appropriate permissions and tools to retrieve and analyze the data efficiently.

- Monitoring and Logging: Implement monitoring tools and logging mechanisms to track the performance of the data processing system and detect issues or anomalies in real time.

- Compliance: Ensure that the data processing activities adhere to industry-specific regulations, organizational policies, and ethical standards.

By addressing these requirements, organizations can establish robust data processing workflows that yield accurate insights, support informed decision-making, and maintain data integrity and security.
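The data masking mentioned under Data Security can be sketched in a few lines of Python. The function names, the masking format, and the salt handling below are assumptions made for illustration. They are not a statement of what any regulation requires, and they do not replace encryption or access controls.

```python
import hashlib


def mask_email(email):
    """Masking: hide most of the local part of an email address, keeping the domain."""
    local, _, domain = email.partition("@")
    if not domain:
        # Not a recognizable address: reveal nothing.
        return "***"
    return local[:1] + "***@" + domain


def pseudonymize(value, salt):
    """Pseudonymization: replace an identifier with a salted SHA-256 digest.

    The same input and salt always give the same digest, so records can still
    be joined on the pseudonym. This is hashing, not encryption.
    """
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()


print(mask_email("alice@example.com"))
print(pseudonymize("customer-42", salt="example-salt"))
```

In practice the salt would be kept secret and managed like any other credential. The hard-coded value here is only a placeholder.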

Who is involved in data processing

Data processing depends on a range of roles and stakeholders who together carry out the workflow. The key roles are:

- Data Analysts: Data analysts are responsible for transforming raw data into insights by applying various analytical techniques. They clean, analyze, and visualize data to identify trends and patterns.

- Data Scientists: Data scientists work on more advanced data analysis, often using machine learning and statistical modeling to extract predictive and prescriptive insights from data.

- Data Engineers: Data engineers design and build the infrastructure necessary for data processing. They develop data pipelines, manage databases, and ensure data is collected, stored, and made accessible for analysis.

- Database Administrators (DBAs): DBAs manage and maintain databases where processed data is stored. They ensure data integrity, optimize query performance, and handle database backups and security.

- Software Developers: Developers write the software and scripts that automate various stages of the data processing pipeline, from data collection to transformation and analysis.

- Data Stewards: Data stewards are responsible for overseeing the quality, security, and compliance of data throughout its lifecycle. They ensure that data governance policies are followed.

- Business Analysts: Business analysts use insights derived from data processing to make informed decisions and recommendations that align with business goals and strategies.

- Domain Experts: Experts in specific fields (e.g., healthcare, finance, marketing) provide domain knowledge that guides data processing efforts and helps interpret the results in a meaningful context.

- IT Administrators: IT administrators manage the technical infrastructure that supports data processing systems, including servers, networks, and security measures.

- Data Privacy Officers: In organizations that handle sensitive or personal data, data privacy officers ensure that data processing practices comply with relevant data protection regulations.

- Data Architects: Data architects design the overall data architecture, ensuring that data flows seamlessly between systems, databases, and applications.

- Quality Assurance (QA) Testers: QA testers validate that the data processing pipeline functions correctly, identifying and reporting any issues or bugs.

- Project Managers: Project managers oversee the planning, execution, and monitoring of data processing projects. They ensure that timelines, budgets, and resources are managed effectively.

- Compliance Officers: Compliance officers ensure that data processing practices adhere to industry regulations, organizational policies, and ethical standards.

- End Users: End users, including decision-makers and analysts, are the ones who ultimately benefit from the insights generated through data processing. Their feedback and requirements shape the entire process.

- Legal Experts: Legal experts help ensure that data processing activities comply with relevant laws, regulations, and contracts.

- Data Consumers: People who use the insights generated by data processing to make decisions, develop strategies, or perform specific tasks in their roles.

The collaboration and expertise of these various roles are essential for effective data processing. Successful data processing requires a multidisciplinary approach that brings together technical skills, domain knowledge, and a commitment to maintaining data quality and security.

How data processing works

Data processing converts raw data into meaningful information through a series of operations that manipulate, analyze, and interpret the data. The main stages, techniques, and considerations are:

- Data Collection: The process begins with gathering raw data from various sources, such as sensors, databases, surveys, or web scraping. The quality and accuracy of collected data significantly impact the outcomes of data processing.

- Data Cleaning: Raw data often contain errors, inconsistencies, and missing values. Data cleaning involves identifying and rectifying these issues to ensure accurate and reliable results.

- Data Transformation: Raw data might be in different formats or structures. Data transformation involves converting data into a unified format for analysis. This might include converting data types, aggregating data, or encoding categorical variables.

- Data Integration: Organizations often have data stored in multiple systems or databases. Data integration involves combining data from different sources to create a unified view for analysis.

- Data Aggregation: Aggregating data involves summarizing and condensing large volumes of data into more manageable and informative forms. This can include generating statistics, calculating averages, and creating summaries.

- Data Analysis: Once the data is cleaned and transformed, various analytical techniques are applied to extract insights. This might involve statistical analysis, data mining, machine learning, or other advanced methods.

- Data Visualization: Visual representations of data, such as charts, graphs, and dashboards, make it easier to understand and communicate insights to stakeholders.

- Data Interpretation: Data analysis leads to findings, trends, patterns, and correlations. Interpreting these results in the context of the problem being addressed is crucial for making informed decisions.

- Decision Making: Based on the insights gained from data processing, organizations can make informed decisions to optimize processes, improve products, or enhance services.

- Data Storage: Processed data can be stored for future reference, analysis, and reporting. Proper data storage solutions ensure data security, accessibility, and compliance with regulations.

- Data Privacy and Security: Protecting sensitive and personal data is essential. Data processing should adhere to privacy regulations and security best practices to prevent unauthorized access or breaches.

- Real-time Processing: In some cases, data needs to be processed and acted upon in real time. This requires specialized techniques and technologies to handle data as it arrives.

- Batch Processing: Alternatively, data can be processed in batches, where data is collected over a period and processed together at specific intervals.

- Scalability: As data volumes grow, data processing systems should be scalable to handle increased loads efficiently.

- Cloud Computing: Cloud platforms offer scalable resources for data processing, storage, and analysis without the need for significant upfront infrastructure investment.

In essence, data processing is a comprehensive process that involves multiple stages, techniques, and considerations to derive valuable insights from raw data. It’s a fundamental aspect of modern business operations and scientific research.
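The difference between batch and real-time processing described above can be shown with a small Python sketch that computes the same average in both styles. The class and function names and the sample readings are hypothetical. Production systems would typically rely on a dedicated batch or streaming framework rather than hand-written loops.

```python
class RunningMean:
    """Real-time style: the mean is updated as each value arrives."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def update(self, value):
        # Incremental update: no need to keep earlier values in memory.
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean


def batch_mean(values):
    """Batch style: values are collected first, then processed together."""
    return sum(values) / len(values)


# Hypothetical sensor readings.
readings = [10.0, 12.0, 11.0, 13.0]

stream = RunningMean()
for reading in readings:
    stream.update(reading)  # a result is available after every reading

print(stream.mean, batch_mean(readings))
```

Both approaches arrive at the same final value. The streaming version has an up-to-date result after every event, while the batch version only produces one once the whole interval has been collected.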

Case study on the concept of data processing

The following case study illustrates data processing in the context of a retail business.

Case Study: Optimizing Inventory Management Through Data Processing

Background: A retail chain operates several stores across different locations. The company wants to optimize its inventory management to reduce costs associated with overstocking and stockouts. They recognize that data processing can help them make informed decisions regarding inventory levels.

Data Collection: The company collects data from various sources, including point-of-sale systems in each store, supplier databases, and historical sales records. This data includes information about product sales, stock levels, reorder history, and customer preferences.

Data Processing Steps:

- Data Cleaning:
  - Identify and remove duplicate or inconsistent entries.
  - Handle missing data by either imputing values or making informed decisions about their impact on analysis.

- Data Transformation:
  - Convert sales data into a consistent format.
  - Aggregate sales data to different time intervals (daily, weekly, monthly) for analysis.
  - Calculate relevant metrics such as average sales, sales growth rate, and seasonal trends.

- Data Analysis:
  - Apply statistical analysis to identify patterns and trends in sales data.
  - Use time series analysis to identify seasonality and forecast demand for different products.
  - Analyze which products are top sellers and identify slow-moving items.

- Data Visualization:
  - Create graphs and charts to visually represent sales trends and patterns.
  - Generate dashboards that provide real-time insights into inventory levels, sales performance, and product popularity.

- Data Interpretation:
  - Determine which products need to be replenished and which can be reduced to avoid overstocking.
  - Identify optimal reorder points and order quantities based on historical data and demand forecasts.

- Decision Making:
  - Adjust inventory levels based on insights gained from data analysis to minimize stockouts and excess inventory costs.
  - Decide on promotional strategies for slow-moving products based on customer preferences and historical data.

- Data Storage:
  - Store cleaned and processed data for future analysis and reference.
  - Maintain an inventory database that updates in real time as sales occur.
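Some of the steps above (weekly aggregation, a simple demand forecast, and a reorder point) can be sketched in Python as follows. The sales figures are invented, and the case study does not say which forecasting method or reorder formula the company uses. This sketch assumes a 7-day moving average and the common textbook rule of average daily demand times lead time plus safety stock.

```python
from statistics import mean

# Hypothetical daily unit sales for one product over 14 days.
daily_sales = [12, 15, 11, 14, 20, 25, 18, 13, 16, 12, 15, 22, 27, 19]


def weekly_totals(sales, days_per_week=7):
    """Transformation: aggregate daily sales into weekly totals."""
    return [
        sum(sales[i:i + days_per_week])
        for i in range(0, len(sales), days_per_week)
    ]


def moving_average_forecast(sales, window=7):
    """Analysis: forecast next-day demand as the mean of the last `window` days."""
    return mean(sales[-window:])


def reorder_point(avg_daily_demand, lead_time_days, safety_stock):
    """Interpretation: stock level at which a new order should be placed.

    Assumed rule: expected demand during the supplier lead time plus a buffer.
    """
    return avg_daily_demand * lead_time_days + safety_stock


forecast = moving_average_forecast(daily_sales)
print(weekly_totals(daily_sales))
# The lead time and safety stock below are illustrative values only.
print(reorder_point(forecast, lead_time_days=3, safety_stock=10))
```

A moving average ignores seasonality, so a retailer with strong seasonal patterns would likely need the time series methods mentioned under Data Analysis instead.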

Benefits:

- Cost Reduction: By optimizing inventory levels, the company reduces carrying costs associated with excess inventory and minimizes lost sales due to stockouts.

- Improved Customer Satisfaction: Avoiding stockouts ensures that customers can find the products they want, leading to higher customer satisfaction.

- Efficient Reordering: Data-driven reorder points and quantities lead to more efficient supply chain management and reduced manual decision-making.

- Personalized Marketing: By analyzing customer preferences, the company can tailor marketing strategies and promotions to specific customer segments.

- Data-Driven Strategy: The company can make strategic decisions backed by data, leading to better business outcomes.

This case study demonstrates how a retail business can leverage data processing to optimize inventory management, improve decision-making, and enhance overall operational efficiency.

White paper on the concept of data processing

Title: Understanding the Concept of Data Processing

Abstract: Data processing is a fundamental concept that lies at the heart of modern business operations, scientific research, and technological advancements. This white paper provides an in-depth exploration of the concept of data processing, covering its key stages, methods, significance, and real-world applications. By delving into the intricacies of data collection, cleaning, transformation, analysis, interpretation, and more, this paper aims to equip readers with a comprehensive understanding of how data processing contributes to informed decision-making and innovation across various domains.

Table of Contents:

- Introduction
  - Definition and Importance of Data Processing
  - Evolution of Data Processing in the Digital Age

- Key Stages of Data Processing
  - Data Collection: Gathering Raw Information
  - Data Cleaning: Ensuring Data Quality and Integrity
  - Data Transformation: Converting and Standardizing Data
  - Data Integration: Combining Data from Multiple Sources
  - Data Aggregation: Summarizing and Condensing Data
  - Data Analysis: Extracting Insights and Patterns
  - Data Visualization: Communicating Insights Effectively
  - Data Interpretation: Understanding Implications and Significance
  - Decision Making: Using Processed Data to Inform Choices

- Methods and Techniques in Data Processing
  - Statistical Analysis and Descriptive Statistics
  - Data Mining and Machine Learning
  - Time Series Analysis
  - Predictive Modeling
  - Natural Language Processing
  - Image and Video Processing

- Applications of Data Processing
  - Business and Finance
  - Healthcare and Medicine
  - Manufacturing and Supply Chain Management
  - Scientific Research and Exploration
  - Social Sciences and Market Research
  - Environmental Monitoring and Analysis

- Challenges and Considerations
  - Data Privacy and Security
  - Handling Big Data
  - Real-time vs. Batch Processing
  - Ensuring Data Quality

- Technological Advancements and Trends
  - Cloud Computing and Scalability
  - Edge Computing for Real-time Processing
  - AI-Powered Automation in Data Processing
  - Integration of IoT and Data Processing

- Ethical Implications
  - Ensuring Fairness and Bias-Free Analysis
  - Transparent Data Processing Practices
  - Consent and Privacy in Data Collection

- Conclusion
  - Recap of Key Concepts
  - Future Prospects of Data Processing

Conclusion: In a data-driven world, understanding the concept of data processing is essential for professionals across various domains. This white paper has provided a comprehensive overview of the stages, methods, applications, challenges, and ethical considerations related to data processing. As technology continues to evolve, data processing remains a cornerstone for making informed decisions, driving innovation, and unraveling the hidden insights within the vast troves of data that surround us. By grasping the intricacies of data processing, individuals and organizations can harness its power to shape a smarter and more informed future.